[Student IDEAS] by Karen Taubenberger - Master in Management at ESSEC Business School

This article discusses the rise of generative AI chatbots like Dr. ChatGPT in healthcare. The article explores the potential benefits and risks of this technology, and the challenges that need to be addressed for safe and effective use. It also highlights the need for new regulations to govern the use of generative AI in healthcare.

---

Accessing health information online has become common in today’s society, with half of European Union citizens actively seeking health information online.1 In addition to cheap, easy and quick access to medical information, online search empowers patients to actively participate in the diagnosis and treatment process.2 Publicly accessible chatbots like ChatGPT are poised to further revolutionize the way individuals seek answers to medical questions. But how reliable is the new ‘Dr. ChatGPT’?

ChatGPT has elevated online search to unprecedented heights. OpenAI’s chatbot is built on a Large Language Model (LLM), a deep learning model that can process and generate human language text. In a recent study, ChatGPT (GPT-4) showed impressive performance compared to human doctors in symptom review, with the appropriate diagnosis listed within the top three results in 95% of cases.3 This vastly outperformed traditional symptom checkers such as the health website WebMD.
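The "top three" figure above is a top-k accuracy: a case counts as a hit when the correct diagnosis appears anywhere among the first k ranked suggestions. The following is a minimal sketch of that metric, not the study's actual evaluation code. The function name `top_k_accuracy` and the four cases are made up purely for illustration.

```python
from typing import Sequence


def top_k_accuracy(
    ranked_predictions: Sequence[Sequence[str]],
    true_labels: Sequence[str],
    k: int = 3,
) -> float:
    """Share of cases whose correct diagnosis is among the first k ranked guesses."""
    if len(ranked_predictions) != len(true_labels):
        raise ValueError("each case needs exactly one true label")
    if not true_labels:
        raise ValueError("need at least one case")
    hits = sum(
        1
        for guesses, truth in zip(ranked_predictions, true_labels)
        if truth in guesses[:k]
    )
    return hits / len(true_labels)


# Hypothetical cases: (ranked suggestions, confirmed diagnosis). Not real data.
cases = [
    (["migraine", "tension headache", "sinusitis"], "tension headache"),
    (["gastritis", "appendicitis", "ibs"], "appendicitis"),
    (["common cold", "influenza", "allergy"], "pneumonia"),
    (["asthma", "bronchitis", "gerd"], "asthma"),
]
predictions = [guesses for guesses, _ in cases]
truths = [truth for _, truth in cases]

print(top_k_accuracy(predictions, truths, k=3))  # 3 of 4 cases hit -> 0.75
print(top_k_accuracy(predictions, truths, k=1))  # 1 of 4 cases hit -> 0.25
```

The gap between the two numbers shows why the metric matters when reading such results: a tool can score well on top-three accuracy while its single first suggestion is right far less often.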

Around 7% of Google searches are health-related, the equivalent of 70,000 searches per minute.4 Given the rapid user growth of ChatGPT, it is likely that ‘Dr. Google’ will soon be augmented or even substituted by ‘Dr. ChatGPT’. With nearly half of the European population showing low health literacy,1 chatbots could significantly improve the consumer’s understanding of health-related information.

A direct comparison between ChatGPT and Google Search assessed their effectiveness in conveying the most relevant information for specific medical inquiries from a patient's viewpoint, while also evaluating patient education materials. While ChatGPT fared better when offering general medical knowledge, it scored worse when responding to questions seeking medical recommendations and guidance.5

Not only are consumers increasingly likely to use ‘Dr. ChatGPT’, the rise of generative AI may also drastically change the way medical professionals work.

Healthcare has traditionally been a sector heavily reliant on human-to-human interaction. However, with a projected shortfall by 2030 of 10 million health workers worldwide6 and strained health system finances, healthcare delivery requires transformation. With the advancement of generative AI, there is potential to unlock a US$1 trillion improvement opportunity within the healthcare industry.

Generative AI, a subset of machine learning, trains models on existing data patterns to generate new yet similar data. This technology is uniquely suited to unlocking the vast potential of healthcare's disparate, unstructured data: clinical notes, diagnostic images, medical charts, and more. In a healthcare setting, generative AI has several benefits over traditional machine learning techniques (discriminative models). Unsupervised learning makes it possible to tap into this rich data pool without the need for expensive data labeling. Moreover, it opens up use cases that traditional machine learning methods do not cover, such as text synthesis and the generation of synthetic data sets.

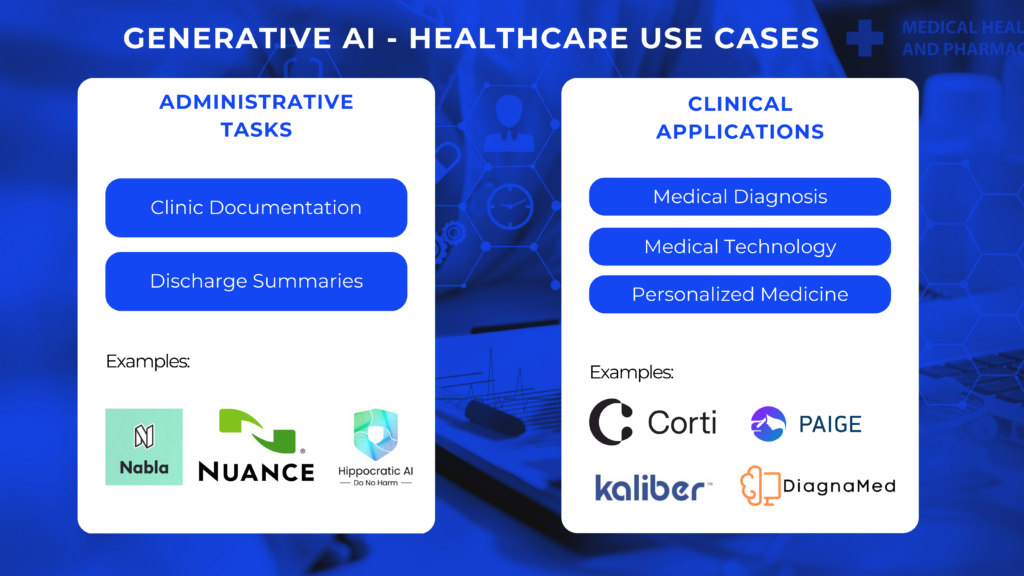

Use cases for medical professionals fall broadly into administrative and clinical applications (see Figure 1). Initially, generative AI is being integrated mainly into administrative tasks (such as clinical documentation and creating discharge summaries), which are simpler to implement and carry lower risks. At later stages, it is expected to be used more extensively in clinical applications such as diagnosis. With healthcare systems facing chronic staffing shortages, generative AI could hold the key to significantly enhancing the quality of care delivery and transforming the entire patient journey.

Figure 1: Selected use cases of Generative AI in healthcare

Physicians devote around one-sixth of their working hours to administrative tasks.8 Digital health start-ups, like the Paris-based Nabla, offer solutions to reduce the paperwork burden. Their digital assistant, called Copilot, automatically transcribes consultations into the documents typically required afterwards, such as prescriptions, follow-up appointment letters and consultation summaries. Nabla claims that doctors can save on average 2 hours a day using Copilot.9 Similarly, Microsoft-owned Nuance Communications has integrated GPT-4 into its medical note-taking tool. Generative AI integration will not only allow for process streamlining and cost reductions; a lower administrative burden will also positively affect doctors’ job satisfaction.

This also creates room for new business model innovations. For example, Hippocratic AI recently introduced on-demand AI agents starting from USD 9 per hour. These task-specific generative AI healthcare agents offer various services, ranging from pre-op assistance to discharge assistance to chronic care check-ins. Healthcare providers, payors, and pharmaceutical firms can book the task-specialized agents on an hourly basis. The agents were developed in close cooperation with NVIDIA to overcome the difficulty generative AI tools have had in connecting with patients on a personal level. NVIDIA’s AI platform allows for super-low-latency conversational interactions, which are crucial for building empathy.10 According to Hippocratic AI’s own user research, every half-second improvement in inference speed increases the ability of patients to emotionally connect with AI healthcare agents by 5-10% or more. While NVIDIA brings the technology stack for speed and fluidity, as well as the deployment of the AI models, Hippocratic AI has developed leading safety-focused LLMs specifically designed for healthcare applications.

Generative AI's application will not only be restricted to healthcare administration. Clinical application is an even bigger field ready for disruption, although most use cases are still pre-commercialization.

Medical Diagnosis - The ultimate deciding factor on whether generative AI will be the doctor of the future is whether LLM-powered systems are able to make reliable and accurate diagnoses. Google computer scientists developed Med-PaLM, an LLM-powered chatbot fine-tuned for the healthcare industry. Med-PaLM was the first AI system to achieve a pass mark (>60%) on U.S. Medical Licensing Examination (USMLE) style questions.11 The chatbot was further optimized for diagnostic dialogue based on real-world datasets such as transcribed medical conversations. According to an article published in Nature, Med-PaLM matched or surpassed doctors’ diagnostic accuracy across six medical specialities, and the generated answers ranked even higher in terms of conversation quality.12 Despite the promising initial results, AI tools will likely only assist doctors in their diagnosis for a long time rather than make them redundant. In September, Copenhagen startup Corti raised USD 60 million in a Series B funding round to accelerate the development of their AI assistant, which supports clinicians with patient assessment in real time.13 Features include analyzing patient interactions to guide decision-making and providing “second opinions.” Unlike other copilots, Corti has built its own models instead of relying on generic models such as GPT-3 from OpenAI. The product is currently used to assess 100 million patients a year, and Corti claims it results in 40% more accurate outcome predictions.14

Medical Technology - Generative AI also has the potential to be directly incorporated into medical products. For example, it can drastically improve the accuracy of the technical diagnostic capabilities of medical software. The end-to-end digital pathology solutions company Paige has recently released PRISM, an oncology-specialized foundation model. Pre-trained on a large-scale dataset of 587,000 whole-slide images and 195,000 clinical reports, the model aids pathologists in cancer detection by automatically generating diagnostic summary reports.15 Generative AI can also be directly integrated into medical devices. For example, Kaliber AI is currently developing a generative AI surgical operating system that provides real-time guidance during surgical workflows and surgeon feedback.16 However, integration into medical devices is still pending regulatory approval.

Personalized Medicine - Another clinical use case of generative AI lies in developing personalized treatment plans and improving medication adherence, which is estimated to be around 50% in the US.17 By creating tailored interventions and digital reminders, AI can help patients stay on track with their prescribed regimens, ultimately leading to better health outcomes. This opens the door to a range of new application fields. For example, the Canadian startup DiagnaMed is currently working on a brain health platform leveraging generative AI.18 The tool estimates brain age by collecting neural activity data with a low-cost headset and calculates a health score with a proprietary machine-learning model. The tool then provides actionable points for precision medicine and personalized treatment plans for mental health and neurodegenerative conditions powered by OpenAI’s GPT models.

The successful integration of generative AI into clinical settings hinges on addressing the following concerns:

With more than 1 in 10 American healthcare professionals already using ChatGPT in their daily work14 and a growing share of the public turning to generative AI tools for medical advice, exercising caution is important. While results might be more accurate than those of a Google search in the past, blindly trusting medical advice from generative AI is not advisable. The WHO recently issued a warning urging caution over the use of generative AI in healthcare.19

While generative AI models have excelled in standardized medical examinations, their performance in real-world diagnostic settings has been less consistent. A recent study published in JAMA Pediatrics suggests that ChatGPT’s diagnoses for children are especially inaccurate, with an error rate of 83%.20 To improve diagnostic accuracy, LLMs should be specifically trained on medical data, as in the case of Google’s Med-PaLM.

New model architectures might also play a crucial role in developing more accurate generative AI tools for the healthcare industry. Hippocratic AI has taken a novel approach to improving the accuracy of their AI agents with a foundation model based on a one trillion parameter constellation architecture, called Polaris.21 Unlike a general large model, the constellation architecture breaks down into a primary agent that focuses on driving engaging conversations and several specialists (see Figure 2).

The primary language model is optimized for conversational fluidity, rapport-building, and empathetic bedside manner, while specialized support models enhance medical accuracy and safety. Each safety-critical task is performed through cooperation between the primary model and the corresponding specialist agents. This redundancy is designed so that if the primary model fails or misses a task for any reason, the corresponding specialist agent keeps the system operating correctly and safely.

Figure 2: High-level overview of LLM constellation architecture Polaris
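The division of labour described above, with one conversational agent whose drafts are reviewed by specialists, can be illustrated with a toy sketch. This is not Hippocratic AI's implementation. Every name here (`Specialist`, `respond`, `dose_specialist`) is hypothetical, the "models" are plain functions with canned behaviour, and the dose limit is an invented number with no clinical meaning.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Specialist:
    """A reviewer that returns a safer reply if the draft is unsafe, else None."""
    name: str
    review: Callable[[str, str], Optional[str]]


def friendly_primary(patient_message: str) -> str:
    """Stand-in for the conversational model: returns a canned draft reply."""
    return "Thanks for telling me. You can take 4000 mg of your medication today."


def dose_specialist(patient_message: str, draft: str) -> Optional[str]:
    """Toy rule standing in for a medication-safety model."""
    made_up_daily_limit_mg = 1200  # invented threshold, NOT medical guidance
    for token in draft.replace(",", " ").split():
        if token.isdigit() and int(token) > made_up_daily_limit_mg:
            return "I need to confirm that dose with your care team first."
    return None


def respond(
    patient_message: str,
    primary: Callable[[str], str],
    specialists: List[Specialist],
) -> str:
    """Let the primary agent draft, then let each specialist veto or pass it."""
    draft = primary(patient_message)
    for specialist in specialists:
        correction = specialist.review(patient_message, draft)
        if correction is not None:
            # A specialist overrides the primary agent on safety-critical content.
            return f"[{specialist.name}] {correction}"
    return draft


reply = respond(
    "How much can I take today?",
    friendly_primary,
    [Specialist("medication check", dose_specialist)],
)
print(reply)
```

In this sketch the specialist simply replaces an unsafe draft. A real system would presumably hand the correction back to the primary model so that the reply keeps its conversational tone, but that detail is an assumption rather than something the source describes.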

The risk of AI hallucinations, in which LLMs make up information when faced with data gaps and produce "unfounded diagnoses", can prove detrimental in a healthcare setting. Given the high stakes, even a 5% error rate is not acceptable. Thus, generative AI should be integrated as a data augmentation tool under human supervision rather than operating in a fully automated manner.

This human-generative AI collaboration would make the critical thinking skills of medical practitioners more important than ever. In other settings, studies have shown that the reliance on generative AI tools significantly increases with workload and time pressure.22 It is not far-fetched to imagine situations where healthcare professionals might be tempted to simplify their work by following the recommendations of generative AI tools without further investigation. Various precautions could help prevent such situations. One idea is to use AI tools only after an initial diagnosis by a doctor. Another idea is to design AI tools with features that encourage a healthy level of skepticism, such as using a "robotic" voice.

The risk of an inaccurate diagnosis by AI tools will never reach 0%, just as doctors are fallible. One of the biggest questions will be who is liable if something goes wrong. Today, it is the healthcare provider, but with technological advancements, legal responsibilities might shift. This raises concerns about whether a shift in liability might lead to overtrusting AI tools. Therefore, a clear division of accountability between the tool and the medical professional is crucial.

The inherent biases in generative AI models' training datasets pose a significant threat to healthcare equity. Notably, gender bias remains a pervasive issue, as sex differences in cell physiology, symptom presentation, disease manifestation, and treatment response have been historically neglected. The availability and quality of sex-specific data are uneven, especially in lower-income countries.

Consequently, closing the data gap is crucial for ensuring the development of generative AI models that produce reliable outcomes for both genders. Ignoring biased training data could lead to misdiagnosis in certain populations. Thus, implementing rigorous governance mechanisms is essential to proactively identify and mitigate potential biases throughout the AI development lifecycle.

Startups like Dandelion Health are providing bias checker solutions for developers of healthcare AI models. Data access is particularly challenging in the healthcare sector, where patient records are subject to strict privacy laws. Dandelion allows its users to cross-check performance across diverse types of patients by leveraging its massive, de-identified dataset from millions of patient records.23
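The core idea behind such a cross-check can be shown in a few lines: compute the same performance metric separately for each patient group and look at the gap. This is a minimal sketch with invented records, not a description of Dandelion Health's product. The names `accuracy_by_group` and `largest_gap` are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# Each record: (patient group, model prediction, confirmed diagnosis).
Record = Tuple[str, str, str]


def accuracy_by_group(records: Iterable[Record]) -> Dict[str, float]:
    """Accuracy of the model computed separately for every patient group."""
    totals: Dict[str, int] = defaultdict(int)
    correct: Dict[str, int] = defaultdict(int)
    for group, predicted, actual in records:
        totals[group] += 1
        correct[group] += int(predicted == actual)
    return {group: correct[group] / totals[group] for group in totals}


def largest_gap(per_group: Dict[str, float]) -> float:
    """Difference between the best-served and worst-served group."""
    return max(per_group.values()) - min(per_group.values())


# Invented evaluation records for illustration only.
records = [
    ("female", "heart attack", "heart attack"),
    ("female", "anxiety", "heart attack"),
    ("female", "reflux", "heart attack"),
    ("female", "heart attack", "heart attack"),
    ("male", "heart attack", "heart attack"),
    ("male", "heart attack", "heart attack"),
    ("male", "reflux", "heart attack"),
    ("male", "heart attack", "heart attack"),
]

per_group = accuracy_by_group(records)
print(per_group)               # {'female': 0.5, 'male': 0.75}
print(largest_gap(per_group))  # 0.25
```

An overall accuracy of 62.5% would hide the fact that one group is served noticeably worse, which is why the breakdown is reported per group rather than in aggregate.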

A 2023 Pew Research Center survey revealed that 60% of American adults would feel uncomfortable if their healthcare provider relied on AI for their medical care.24 Safeguarding patient privacy remains paramount in healthcare, necessitating robust data security protocols and anonymization techniques to ensure information safety.

In this context, the utilization of synthetic data, which mimics the statistical relationships of real-world data without revealing identifiable patient information, might allow for the protection of individuals' privacy. Generative AI models like GANs can synthesize electronic health record data by learning the underlying data distributions without revealing sensitive patient information. However, challenges with synthetic data remain, such as reproducing the biases of the underlying dataset and artificially inflating model performance due to data leakage.
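To make "mimicking statistical relationships" concrete, here is a deliberately simple stand-in for a GAN: it fits the means, spreads and correlation of two columns and then samples brand-new rows from a matching bivariate normal distribution. The technique is a plain parametric sampler, not a GAN, and the "real" values are invented. The names `pearson` and `synthesize` are hypothetical.

```python
import math
import random
import statistics
from typing import List, Sequence, Tuple


def pearson(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation coefficient of two equally long sequences."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


def synthesize(
    ages: Sequence[float], pressures: Sequence[float], n: int, seed: int = 0
) -> List[Tuple[float, float]]:
    """Sample n new (age, pressure) rows that share the originals' statistics."""
    rng = random.Random(seed)
    mean_a, sd_a = statistics.fmean(ages), statistics.stdev(ages)
    mean_p, sd_p = statistics.fmean(pressures), statistics.stdev(pressures)
    rho = pearson(ages, pressures)
    residual = math.sqrt(max(0.0, 1.0 - rho ** 2))  # guard against rounding
    rows = []
    for _ in range(n):
        z1, z2 = rng.gauss(0, 1), rng.gauss(0, 1)
        age = mean_a + sd_a * z1
        # Mixing z1 into the second column reproduces the fitted correlation.
        pressure = mean_p + sd_p * (rho * z1 + residual * z2)
        rows.append((age, pressure))
    return rows


# Invented "patient records": age in years, systolic blood pressure in mmHg.
real_ages = [34, 45, 52, 61, 68, 73, 29, 57]
real_pressures = [118, 125, 131, 138, 149, 152, 121, 129]

synthetic = synthesize(real_ages, real_pressures, n=5000)
synthetic_ages = [row[0] for row in synthetic]
synthetic_pressures = [row[1] for row in synthetic]

print(round(pearson(real_ages, real_pressures), 2))
print(round(pearson(synthetic_ages, synthetic_pressures), 2))
```

The sketch also illustrates one of the caveats mentioned above: because the sampler copies whatever correlation exists in the source data, any bias in the original records is carried straight into the synthetic ones.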

Healthcare is one of the most strictly regulated industries. In Europe, the EMA (European Medicines Agency) acts as a reviewing body for member nations, while in the US the FDA (Food and Drug Administration) oversees the industry.

In the US, there are currently no regulatory categories tailored specifically to AI-based technologies. Generative AI falls in a grey area between medical information websites and medical devices. Medical websites like WebMD, which merely aggregate knowledge, are not FDA regulated. On the other hand, AI medical devices “that interpret patient information and make predictions in medium-to-high-risk domains”25 (also called Software as a Medical Device, or SaMD) require FDA approval.

The European Parliament passed the AI Act on March 13, 2024, establishing the world’s first major law for regulating AI. This sector-spanning law introduces new requirements for developers and deployers of AI-enabled digital health tools. However, the concrete impact of the AI Act is still ambiguous, and details will only emerge later through associated guidance, standards, and member state laws and policies.26

Overall, the regulatory landscape for generative AI in the medical sphere is still nascent. While existing frameworks cover general AI use cases, LLMs differ significantly from prior deep learning methods. Regulating generative AI’s adaptive algorithms and autodidactic functions remains an open task. To ensure the safe and effective implementation and commercialization of LLMs in the healthcare sector, new regulatory frameworks are imperative. These regulations should make a clear distinction between LLMs specifically trained on medical data and those designed for non-medical purposes. For the successful integration of generative AI in healthcare applications, regulatory focus should centre on overseeing individual companies rather than scrutinizing each individual iteration of LLMs.

One important component for future innovation, as envisaged in the new AI Act, will be “regulatory sandboxes”.27 These will allow businesses to explore and test new AI products and services in a controlled environment under a regulator's supervision.

‘Dr. ChatGPT’ is rapidly gaining importance in the medical space and has significantly changed the way we search for medical information over the past year. To unlock further use cases for administrative and clinical applications, addressing pain points will be paramount. In the clinical space, models trained specifically on medical data such as Med-PaLM will play a more important role compared to general models such as ChatGPT. One thing is clear: Generative AI has the potential to significantly alter patients’ healthcare journey. The transformation potential in the healthcare industry is tremendous and expands even into the development of new drugs.