[Student IDEAS] by Qinglan Huang & Yiyang Zhao - Master in Management at ESSEC Business School

The article discusses the relationship between AI and environmental sustainability, emphasizing the need for "Green AI" to reduce AI's carbon and resource footprint. It covers strategies such as improving energy efficiency in data centers, shifting to cloud and edge computing, optimizing algorithms, and ensuring the circularity of AI hardware components. It highlights the importance of regulations, transparency, and incentives in promoting sustainable AI practices, as well as the role of the EU in governing the green AI sector. The ultimate goal is to make environmentally friendly AI the industry standard.

---

When you think about “Artificial Intelligence” and “Green”, what connection comes to mind? You probably think of Artificial Intelligence (AI) and machine learning (ML) as powerful tools to combat climate change. Indeed, governments, industries and companies already use AI to collect and process data in order to prepare for climate change. However, given AI's unprecedented impact, it is imperative to examine the negative effects that massive AI adoption might have on the environment, and on the climate in particular. While AI is frequently hailed as a game changer for the transition in areas such as agriculture, buildings and mobility, the energy and resource consumption associated with AI computing is skyrocketing, along with estimated carbon emissions. What would it take to reduce this impact? And how are multinational companies (MNCs), small and medium-sized enterprises (SMEs) and other AI players tackling the issue of green AI?

Green AI is an emerging field that addresses the environmental sustainability of Artificial Intelligence. Its primary objective is to develop tools and techniques that reduce the carbon and resource footprint of AI adoption. The study “A role of Artificial Intelligence in the European Green Deal”, published by the European Parliament, illustrates the urgent need for regulation and governance to manage the dynamics of AI in pursuing environmental objectives. Stakeholders across sectors are working toward suitable regulatory instruments for assessing AI's environmental impacts and integrating that assessment into the existing European regulatory framework.

In addition to upcoming assessments and regulations on AI sustainability, numerous programmes and initiatives have been launched to incentivise sustainable business applications and create more market opportunities. Incentives such as loans aligned with the future EU taxonomy, or targeted ‘green’ adjustments to public procurement law, have helped empower specific actors and accelerate the implementation of sustainable AI.

It is no secret that AI requires vast amounts of energy, and that its footprint covers emissions across its entire life cycle, not just training. For companies dependent on AI technology, the carbon emissions resulting from AI operations have become a significant and growing concern.

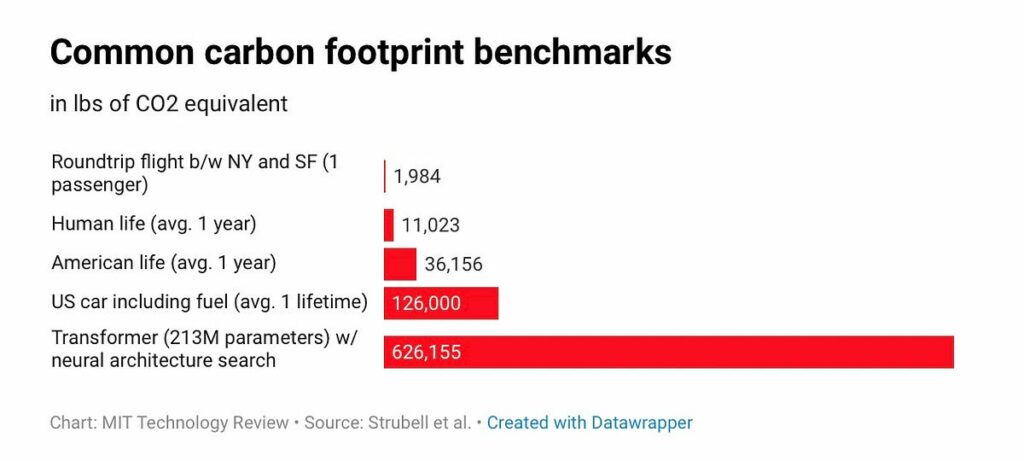

Training a machine learning system, for example, can require processing millions of examples or simulated scenarios and performing statistical computations on them over and over. This can go on for weeks or even months, consuming a great deal of computing power. MIT Technology Review reported that training one large AI model can emit more than 626,000 pounds of carbon dioxide equivalent, nearly five times the lifetime emissions of the average American car.
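
For readers who think in metric units, the reported figure converts with simple arithmetic. The sketch below relies only on the exact definition of the international pound (0.45359237 kg); the function name is our own.

```python
# Convert the reported training footprint from pounds to metric tonnes of CO2e.
KG_PER_LB = 0.45359237  # exact definition of the international avoirdupois pound


def pounds_to_tonnes(pounds: float) -> float:
    """Convert a mass in pounds to metric tonnes (1 t = 1,000 kg)."""
    return pounds * KG_PER_LB / 1000.0


tonnes = pounds_to_tonnes(626_000)
print(f"{tonnes:.0f} t CO2e")  # prints "284 t CO2e"
```

In other words, the training run in question corresponds to roughly 284 tonnes of CO2e.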

Measuring the footprint of AI accurately, however, is difficult. One must first determine its power usage, most of which goes to the data centers that provide the computational power to process, analyze and store vast datasets. Beyond the power drawn by the IT equipment itself, cooling systems such as air conditioning and liquid cooling consume substantial energy to keep continuously running servers, storage systems and networking equipment from overheating and to maintain optimal operating conditions.

For tech companies, data center emissions are mainly categorized as Scope 2 emissions, that is, indirect emissions from the electricity an organization purchases. According to the International Energy Agency (IEA), data centers alone account for about 1-1.3% of global electricity consumption, and data transmission networks for roughly another 1-1.5%. These shares are expected to rise quickly as more organizations and industries adopt AI technologies.
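
The accounting described in the two paragraphs above can be sketched as follows: total facility electricity is the IT load plus cooling and other overhead, and location-based Scope 2 emissions multiply that purchased electricity by an average grid emission factor. All figures, including the 0.3 kg CO2e/kWh grid factor, are hypothetical placeholders, and the sketch assumes that all of the facility's electricity is purchased from the grid.

```python
from dataclasses import dataclass


@dataclass
class DataCenterUsage:
    """Electricity drawn by a data center over some period (hypothetical buckets)."""
    it_energy_kwh: float       # servers, storage and networking equipment
    cooling_energy_kwh: float  # air conditioning, liquid cooling, etc.
    other_kwh: float           # lighting, power-conversion losses and similar overhead

    @property
    def total_kwh(self) -> float:
        return self.it_energy_kwh + self.cooling_energy_kwh + self.other_kwh


def scope2_location_based_kg(usage: DataCenterUsage, grid_kg_per_kwh: float) -> float:
    """Location-based Scope 2 emissions: purchased electricity x average grid emission factor."""
    return usage.total_kwh * grid_kg_per_kwh


# Hypothetical monthly figures, for illustration only.
month = DataCenterUsage(it_energy_kwh=100_000.0, cooling_energy_kwh=40_000.0, other_kwh=10_000.0)
print(month.total_kwh)                        # 150000.0
print(scope2_location_based_kg(month, 0.3))   # 45000.0 (kg CO2e, i.e. 45 t)
```

Because cooling enters the total directly, every kilowatt-hour of cooling saved lowers Scope 2 emissions by the grid factor, just as IT savings do.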

Another large share of AI's emissions comes from the life cycle of the hardware it uses. AI models run on hardware: computers that incorporate resources such as processors, memory and networking. Embodied carbon emissions arise because energy is used at every stage of this hardware's life cycle, including raw material extraction, manufacturing, upstream transportation and disposal.
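
To see how embodied carbon can be folded into a per-use footprint, the sketch below sums emissions over the life-cycle stages named above and spreads the total linearly over the hardware's service life. Linear amortization is one common convention that we assume here, not one prescribed by the article, and every number is a hypothetical placeholder.

```python
# Hypothetical embodied emissions of one server by life-cycle stage, in kg CO2e.
# These numbers are illustrative placeholders, not measured values.
EMBODIED_BY_STAGE_KG = {
    "material_extraction": 600.0,
    "manufacturing": 900.0,
    "upstream_transportation": 100.0,
    "disposal": 50.0,
}

HOURS_PER_YEAR = 365 * 24


def total_embodied_kg(stages: dict[str, float]) -> float:
    """Sum embodied emissions over all life-cycle stages."""
    return sum(stages.values())


def amortized_embodied_kg(total_kg: float, lifetime_years: float, hours_used: float) -> float:
    """Attribute embodied emissions linearly to `hours_used` out of the hardware's lifetime."""
    return total_kg * hours_used / (lifetime_years * HOURS_PER_YEAR)


total = total_embodied_kg(EMBODIED_BY_STAGE_KG)
print(total)  # 1650.0
# Share attributable to one 720-hour month of a hypothetical 4-year service life:
print(f"{amortized_embodied_kg(total, lifetime_years=4, hours_used=720):.1f} kg")  # 33.9 kg
```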

The rise of AI emissions has made it crucial for the tech sector to tackle its carbon footprint, which requires a holistic and robust approach to green AI. In what follows, we identify the key pathways for green AI practices and the low-hanging fruit that lets companies see an impact immediately.

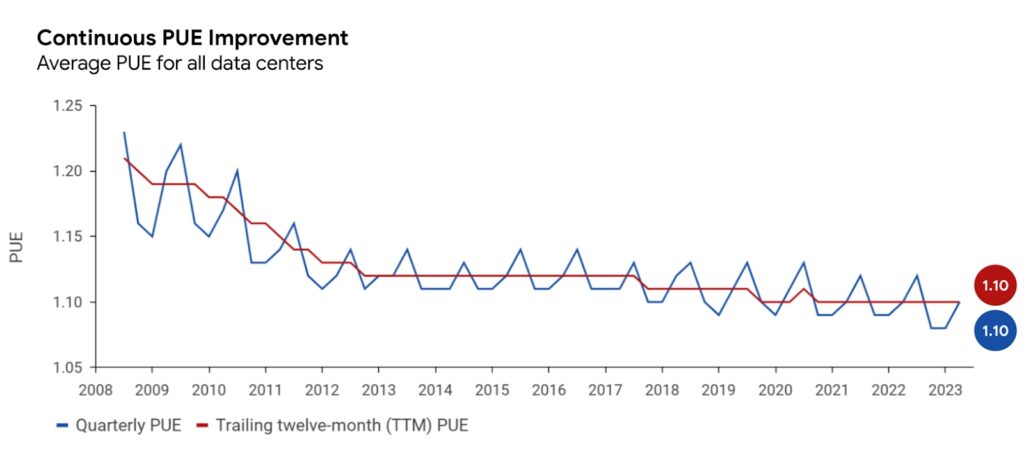

One way to abate the carbon emissions of AI usage is to adopt more efficient, next-generation infrastructure. A simple approach is to improve the power usage effectiveness (PUE) [1] of the data centers in use. This can be done by incrementally upgrading existing data centers, or by replacing them with, or adding, data centers built to the latest practices. Operators can also cut the IT load itself, for example by shutting down “zombie servers” (servers that draw electricity but no longer host any workloads or data) and by consolidating workloads onto fewer servers, which reduces the number of servers needed and raises the utilization of each remaining one.
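
PUE is the ratio of total facility energy to the energy delivered to IT equipment, so a value of 1.0 would mean zero overhead. The sketch below computes it and, separately, shows the arithmetic behind consolidation: at a higher target utilization, the same workload fits on fewer servers. The workload units, server capacity and utilization targets are hypothetical.

```python
import math


def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power usage effectiveness: total facility energy / IT equipment energy (ideal = 1.0)."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    return total_facility_kwh / it_equipment_kwh


def servers_needed(workload_units: float, capacity_per_server: float, target_utilization: float) -> int:
    """Minimum number of servers that host a workload at a target utilization (0 < u <= 1)."""
    if not 0 < target_utilization <= 1:
        raise ValueError("target utilization must be in (0, 1]")
    return math.ceil(workload_units / (capacity_per_server * target_utilization))


print(pue(157_000, 100_000))         # 1.57
# Hypothetical fleet: 40 units of work, each server able to handle 1.0 unit at full load.
print(servers_needed(40, 1.0, 0.25))  # 160 servers at 25% utilization
print(servers_needed(40, 1.0, 0.5))   # 80 servers at 50% utilization
```

Consolidation lowers IT energy rather than PUE itself; because PUE is a ratio, removing IT load without trimming cooling overhead can even raise it slightly, which is why both figures are worth tracking.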

For large deployments, advanced cooling systems for servers and data centers are also feasible. Enterprises can move data centers to cooler locations or invest in new cooling technology, although this can be expensive. Microsoft has done the latter: it has built a Thermal Energy Center with a more efficient cooling system that exploits the temperature difference between the subsurface soil, which stays constant year-round, and the ambient air, which changes with the seasons. This system is expected to reduce energy consumption by more than 50% compared with a typical utility plant.

Recycling data centers' waste heat is another option. Microsoft's new data center region in the Helsinki metropolitan area, which will run on 100% emission-free electricity, is designed to capture the excess heat it generates and put that clean heat to use. Fortum, a Helsinki-based energy company, will transfer the heat from the servers to homes, businesses and public buildings connected to the local district heating system. The project is expected to reduce CO2 emissions by about 400,000 tonnes annually and to upgrade community heating networks in Finland.

Improving the efficiency of data centers may sound appealing, but in practice it is expensive and yields limited carbon reductions. According to a McKinsey analysis, a company that doubled its investment in existing infrastructure and cloud to reduce PUE would cut its carbon emissions by only 15-20%.

For most AI adopters, a much more efficient approach than improving on-premises data centers is to move their workloads directly onto cloud infrastructure. Hyperscalers (the largest cloud service providers) and colocation providers have already invested massively in ultra-efficient data centers with PUEs of 1.4, 1.3 or, on occasion, as low as 1.1, whereas the current industry average for an on-premises data center is 1.57. Recent studies have even found that running business applications on cloud infrastructure rather than in on-premises enterprise data centers in Europe could reduce the associated energy use by nearly 80%. Public cloud providers such as Microsoft, Google and Amazon generally run their data centers far more efficiently than enterprises run their own servers. Small deployments that exist for a specific reason and cannot easily be moved to the public cloud, however, call for other alternatives.
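
Holding the IT workload fixed, the PUE figures above translate directly into facility energy. The sketch assumes an identical, hypothetical IT load in every facility, so that only PUE differs.

```python
def facility_energy_kwh(it_energy_kwh: float, pue: float) -> float:
    """Total facility energy implied by an IT load and a PUE (PUE = facility / IT energy)."""
    return it_energy_kwh * pue


IT_LOAD_KWH = 1_000_000.0  # hypothetical annual IT energy, identical in every facility
ON_PREM_PUE = 1.57         # industry-average on-premises PUE quoted in the text

on_prem_kwh = facility_energy_kwh(IT_LOAD_KWH, ON_PREM_PUE)
for cloud_pue in (1.4, 1.3, 1.1):
    saving = 1 - facility_energy_kwh(IT_LOAD_KWH, cloud_pue) / on_prem_kwh
    print(f"PUE {cloud_pue}: {saving:.1%} less facility energy than on-premises")
# PUE 1.4: 10.8% ..., PUE 1.3: 17.2% ..., PUE 1.1: 29.9% ...
```

Under these assumptions, PUE alone yields savings of at most about 30%; the nearly 80% reduction reported for cloud migration presumably also reflects other factors, such as higher server utilization and more efficient hardware.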

At the same time, cloud computing has drawbacks such as service downtime or significant slowdowns caused by connectivity or network issues, which can lead to slower responses and potentially higher energy consumption. Emerging Edge AI is well positioned to address this issue. Edge AI reduces the dependence on connectivity between systems by letting users process data in real time on local devices such as computers, Internet of Things (IoT) devices or dedicated edge servers. As a distinctive disruptor, edge computing reduces the need for high-performance computing infrastructure and can thus significantly reduce energy consumption and carbon emissions.

Edge computing is increasingly seen as the next contender to challenge GPUs at the vanguard of the AI computing market. OmniML, an AI startup recently acquired by NVIDIA in an undisclosed deal in February 2023, specializes in shrinking machine learning models to fit edge devices, enabling machine learning tasks to run up to 10x faster on different edge devices with one-tenth of the engineering effort. Last year, OmniML launched Omnimizer, a platform that adapts computationally intensive AI models to low-end hardware. This adaptation bridges the gap between AI applications and edge devices and greatly expands what the devices can do. As data storage and computation become more accessible to users, adapted, hardware-aware AI is also faster, more accurate, more energy efficient and more cost-effective. Edge AI thus plays a critical role in green AI, enabling energy-efficient and decentralized AI systems.
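
The article does not describe how OmniML or Omnimizer shrink models, so the sketch below shows only one common, generic model-compression technique: post-training symmetric 8-bit quantization, which stores each weight as a small integer plus one shared scale factor. Storing int8 codes instead of 32-bit floats cuts weight memory roughly fourfold. The weights and function names are hypothetical.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor int8 quantization: w is approximated by scale * q, q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Map int8 codes back to approximate float weights."""
    return [scale * v for v in q]


weights = [0.42, -1.27, 0.003, 0.9]  # hypothetical layer weights
q, scale = quantize_int8(weights)
print(q)  # [42, -127, 0, 90]
restored = dequantize(q, scale)
# Rounding to the nearest code bounds each weight's error by half a quantization step.
print(max(abs(w - r) for w, r in zip(weights, restored)) <= scale / 2)  # True
```

Real edge toolchains add refinements such as per-channel scales or quantization-aware training; this sketch only conveys the core idea of trading precision for size.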

Reaching the overarching goal of green AI also requires developing more efficient models and algorithms that keep pace with progress in hardware. As state-of-the-art machine learning shifts to edge devices, algorithm design faces more constraints, such as limited memory, compute and power, than traditional approaches do. These constraints could also spur efforts to develop better models for representing patterns in data and better algorithms for fine-tuning model parameters.

Opportunities to reduce electricity usage exist at different stages of an AI system’s life cycle (e.g., data collection, training, monitoring). In the pre-training phase, feeding the model structured data instead of large volumes of raw data can reduce the size of training sets by several orders of magnitude. Starting from a well-chosen baseline, similar performance can be achieved with substantially fewer training examples or fewer gradient steps. During training, more efficient training and inference procedures can reduce the number of model variants required. In addition, the choice of model and algorithm is crucial to saving computational power holistically across the pre-training and training phases. For example, tree-based models with sparse features could serve as a viable, less energy-intensive alternative to deep neural networks. A 2021 study in the International Journal of Data Science and Analytics showed that an energy-aware tree-based model, which selects branches requiring less computational effort, can be 31% more energy efficient with minimal impact on accuracy.
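
One common way to spend fewer gradient steps is early stopping: training halts once validation loss stops improving. This is our illustration of the general idea, not the method of the study cited above; the loss curve is hypothetical, and the sketch assumes that training energy scales roughly with the number of epochs run.

```python
def early_stopping_epoch(val_losses: list[float], patience: int = 3, min_delta: float = 0.0) -> int:
    """Return how many epochs actually run before stopping.

    Training halts once validation loss has failed to improve by more than
    `min_delta` for `patience` consecutive epochs.
    """
    best = float("inf")
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best - min_delta:
            best = loss
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch
    return len(val_losses)


# Hypothetical validation-loss curve for a 20-epoch budget that plateaus after epoch 6.
losses = [1.00, 0.70, 0.55, 0.48, 0.45, 0.44, 0.44, 0.45, 0.44, 0.46] + [0.45] * 10
run = early_stopping_epoch(losses, patience=3)
print(f"Stopped after {run} of {len(losses)} epochs")  # Stopped after 9 of 20 epochs
```

Under the proportionality assumption, stopping at epoch 9 would save a little over half of the training energy budgeted for 20 epochs.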

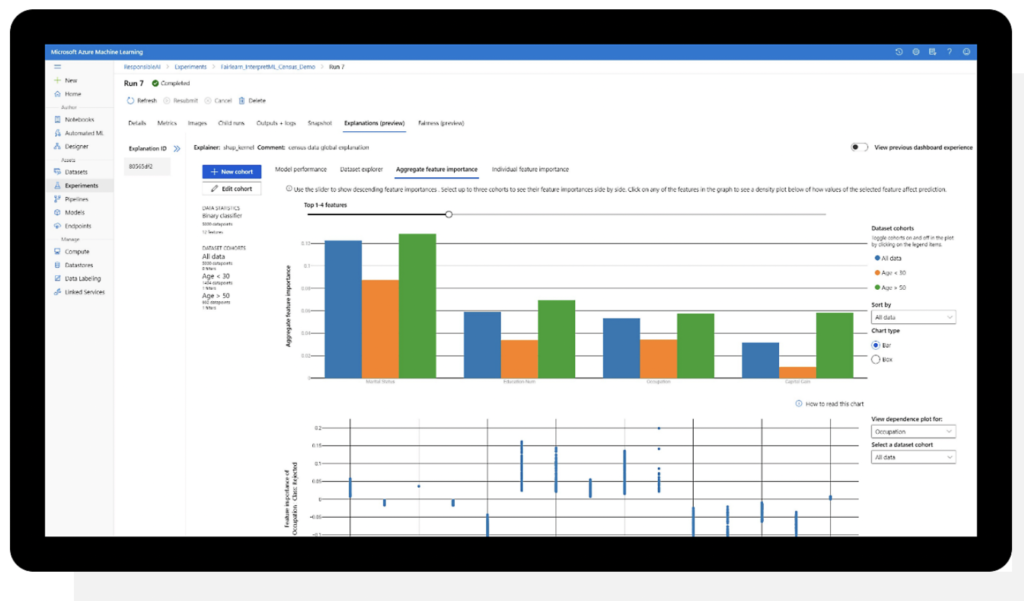

Reporting performance curves and building consumption metrics will drive progress toward measuring and mitigating the carbon footprint of algorithms. Training curves let future developers compare models across a range of compute budgets, and running experiments with different model sizes provides valuable insight into how model size affects performance. Last year Microsoft, in partnership with the Allen Institute for AI and the Green Software Foundation, released energy consumption metrics within Azure Machine Learning, allowing developers to pinpoint their most energy-intensive jobs. Such metrics help users make wiser decisions when selecting models and highlight the stability of different approaches. Clear and transparent reporting of electricity consumption, carbon emissions and cost is also a vital step toward more equitable and greener AI.
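
As one example of such a consumption metric, the Green Software Foundation's Software Carbon Intensity (SCI) specification defines a rate, SCI = ((E × I) + M) per R, where E is the energy consumed, I the carbon intensity of that energy, M the share of embodied hardware emissions, and R a functional unit such as one inference. The sketch follows our reading of that formula, with M = TE × (TiR / EL) × (RR / ToR); it is not a description of what Azure Machine Learning reports, and all input values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class SciInputs:
    energy_kwh: float           # E: energy consumed by the software
    intensity_g_per_kwh: float  # I: carbon intensity of that electricity (gCO2e/kWh)
    total_embodied_g: float     # TE: total embodied emissions of the hardware (gCO2e)
    time_reserved_h: float      # TiR: hours the hardware is reserved for this software
    lifespan_h: float           # EL: expected hardware lifespan in hours
    resources_reserved: float   # RR: e.g. vCPUs or GPUs reserved
    total_resources: float      # ToR: total vCPUs or GPUs on the hardware
    functional_units: float     # R: e.g. number of inferences served


def sci_g_per_unit(x: SciInputs) -> float:
    """Software Carbon Intensity: ((E * I) + M) per R, in gCO2e per functional unit."""
    operational = x.energy_kwh * x.intensity_g_per_kwh
    embodied = (x.total_embodied_g
                * (x.time_reserved_h / x.lifespan_h)
                * (x.resources_reserved / x.total_resources))
    return (operational + embodied) / x.functional_units


# Hypothetical day of inference on 8 of a server's 64 vCPUs.
day = SciInputs(
    energy_kwh=12.0, intensity_g_per_kwh=300.0,
    total_embodied_g=1_000_000.0, time_reserved_h=24.0, lifespan_h=4 * 365 * 24,
    resources_reserved=8, total_resources=64, functional_units=10_000,
)
print(f"{sci_g_per_unit(day):.3f} gCO2e per inference")  # 0.369 gCO2e per inference
```

Expressing the result per functional unit is what makes runs of different sizes comparable, in the spirit of the performance-curve reporting described above.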

A majority of the carbon emissions from AI usage is classified as Scope 3 emissions under Category 2, Capital Goods, which covers emissions related to long-lasting physical assets such as infrastructure and equipment. To reduce the emissions from manufacturing, transporting and disposing of components, it is essential to ensure that the components sourced from suppliers are not carbon-intensive from a life cycle perspective.

A key approach is to strengthen the life cycle assessments of procured components and adapt sourcing strategies to favor more energy-efficient hardware. For instance, Microsoft has adopted a responsible sourcing strategy and eco-design requirements for its cloud hardware. It has made sustainability a key part of the entire Microsoft Azure hardware design process, covering energy efficiency, repairability, upgradability and durability. It has also launched initiatives toward its zero-waste goal in managing cloud hardware across its growing fleet of data centers.

Other companies have taken similar measures to ensure the efficiency and circularity of their data center components, but current initiatives must be strengthened with more stringent policies and concrete corporate targets. AI players should engage continuously with their suppliers to incentivise them to innovate and bring more carbon-free solutions to the market. Comprehensive circular design guidance and a recycling scheme are also essential to reduce the resources needed to operate an AI system.

AI is likely to keep growing in the coming years, both in capacity and in application. Given the growing attention to the environmental sustainability issues raised by AI usage, companies are looking for quick wins they can apply today. Whether a solution is both low-hanging and impactful depends on its technological and market maturity, its affordability and its abatement potential. It also varies, first of all, with the type of AI stakeholder.

For AI adopters, that is, companies relying on AI to improve their traditional business processes, switching directly to the cloud or leveraging edge computing capacity would be a much better option than optimizing the efficiency of their current data centers, since efficiency investments could be costly and painful for them.

More efficient, centralized cloud services, or edge AI, can go a long way toward reducing both environmental and economic costs.

A portfolio of green AI tactics for choosing the right models and algorithms will help reduce energy consumption across the machine learning lifecycle. It is worth bearing in mind that small changes and conscious choices, compounded across all the different stages, make a big difference to the final energy consumption. While hardware is evolving to allow more decentralized and economical computing, algorithms should likewise be upgraded with power efficiency and responsibility in mind, not just developed to stay at the bleeding edge.

For companies whose business models have AI at their core, such as Google and Microsoft, which already own cloud services, we recommend exploring novel approaches to cooling infrastructure rather than transitioning to the cloud. Based on our analysis, the PUE of their data centers is already low, yet well-established methods for cooling operational computing power sustainably are still lacking. For smaller deployments that must remain on-premises for a specific purpose, we suggest carefully evaluating the temperature and cooling conditions of the chosen location and recovering the heat generated for reuse. This will help ensure optimal energy efficiency for the cooling infrastructure in use, while remaining affordable and eco-efficient.

Of course, sourcing renewable power by signing power purchase agreements (PPAs) with local utilities, or generating green electricity themselves, is a universal option for AI players and one already adopted by many tech giants.

In terms of the life cycle emissions of AI hardware, the first step is to establish an in-house Life Cycle Assessment (LCA) capability to better determine where the emissions from building AI infrastructure come from. Once they know that most emissions come from a single component or a small set of components, companies can act on them effectively.
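
Once an LCA has produced per-component figures, finding the hotspots mentioned above is a simple ranking exercise. The sketch below returns the smallest set of components, largest first, that together account for a chosen share of emissions; the 80% cut-off and the component figures are hypothetical.

```python
def emission_hotspots(component_kg: dict[str, float], share: float = 0.8) -> list[str]:
    """Return the smallest set of components (largest emitters first) whose
    emissions together reach at least `share` of the total."""
    total = sum(component_kg.values())
    hotspots: list[str] = []
    cumulative = 0.0
    for name, kg in sorted(component_kg.items(), key=lambda item: item[1], reverse=True):
        hotspots.append(name)
        cumulative += kg
        if cumulative >= share * total:
            break
    return hotspots


# Hypothetical LCA results for one AI server, in kg CO2e (illustrative only).
server_lca = {"GPUs": 1500.0, "memory": 900.0, "chassis": 150.0,
              "storage": 300.0, "CPU": 250.0, "PSU": 100.0}
print(emission_hotspots(server_lca))  # ['GPUs', 'memory', 'storage']
```

Here three of six components cover over 80% of the footprint, which is where sourcing and design efforts would pay off first.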

A systematic approach is to integrate environmental and circularity metrics into internal policies. Corporate policies that favor components with smaller footprints will mitigate the Scope 3 emissions from capital goods purchased within the value chain. This could also create a virtuous cycle that encourages players across the whole value chain to bring more carbon-free solutions to the market.

To harness the full potential of AI without harming the planet, relevant stakeholders in the industry should align on a shared definition of green AI rather than leave it vaguely elaborated by technical experts. Transparency is essential to make it actionable. So far, instead of consistent sustainability practices among small and industrial AI players, we see widely varying strategies and a lack of data and information disclosed by companies. AI emissions should be important to tech players, but their actions are neither sufficient nor consistent enough to solve the problem. A shift toward more sustainable AI adoption requires huge investments, proper market mechanisms and market readiness, which have yet to come.

We advocate for regulation to play an essential role in intervening and driving transparency in the market. Green requirements for AI should be developed to ensure a minimum level of sustainability in AI usage. The right incentives should be introduced to encourage companies to choose cleaner AI solutions when making business decisions. Green certifications and resources could reduce information asymmetry in the market and promote clear guidelines and standards for environmentally friendly AI practices.

The EU is a pioneer in governing the green AI sector. It has already set the tone in developing a comprehensive regulatory framework and has provided concrete recommendations for AI players to mitigate their environmental impacts. But given the rapid proliferation of AI and the pressing environmental consequences seen in recent years, we anticipate more to come.

Green AI is not just a technological process to automate computation. It is how we collectively bring together all our brilliance and expertise to solve the world’s economic, environmental, social and legal challenges. Given a clearer regulatory framework and more actionable guidelines for all relevant stakeholders in the industry, green AI solutions are expected to grow rapidly in the future. A more holistic and responsible mindset, together with joint efforts in AI development, will produce a ripple effect. The ultimate goal is to trigger a paradigm shift so that sustainable Artificial Intelligence applications become the norm for the whole industry.

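[1] The power usage effectiveness (PUE) ratio is a metric of how efficiently a data center uses energy, defined as the total energy consumed by the facility divided by the energy delivered to its IT equipment; an ideal value is 1.0.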

[2] Hao, K. (2019, June 9). Training a single AI model can emit as much carbon as five cars in their lifetimes. MIT Technology Review.

[3] Rozite, V., Bertoli, E., Reidenbach, B. (2023, July 11). Data Centers and Data Transmission Networks. IEA.

[4] Becker, G., Bennici, L., Bhargava, A., Del Miglio, A., Lewis, J., Sachdeva, P. (2022, September 15). The green IT revolution: A blueprint for CIOs to combat climate change. McKinsey Digital.

[5] Garcia-Martin, E., Lavesson, N., Grahn, H., Casalicchio, E., Boeva, V. (2021). Energy-aware very fast decision tree. International Journal of Data Science and Analytics, 11, 1-22. doi:10.1007/s41060-021-00246-4.

[6] Fortum Corporation (2022, March 17). Fortum and Microsoft announce world’s largest collaboration to heat homes, services and businesses with sustainable waste heat from new data centre region. Press release.

[7] Microsoft. 2022 Environmental Sustainability Report.

[8] Gailhofer, P., Herold, A., Schemmel, J. P., Scherf, C.-S., Urrutia, C., Köhler, A. R., Braungardt, S. (2021, May 1). A role of Artificial Intelligence in the European Green Deal. European Parliament.